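Tracing in AI Toolkit

AI Toolkit provides tracing capabilities to help you monitor and analyze the performance of your AI applications. You can trace the execution of your AI applications, including interactions with generative AI models, to gain insight into their behavior and performance.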

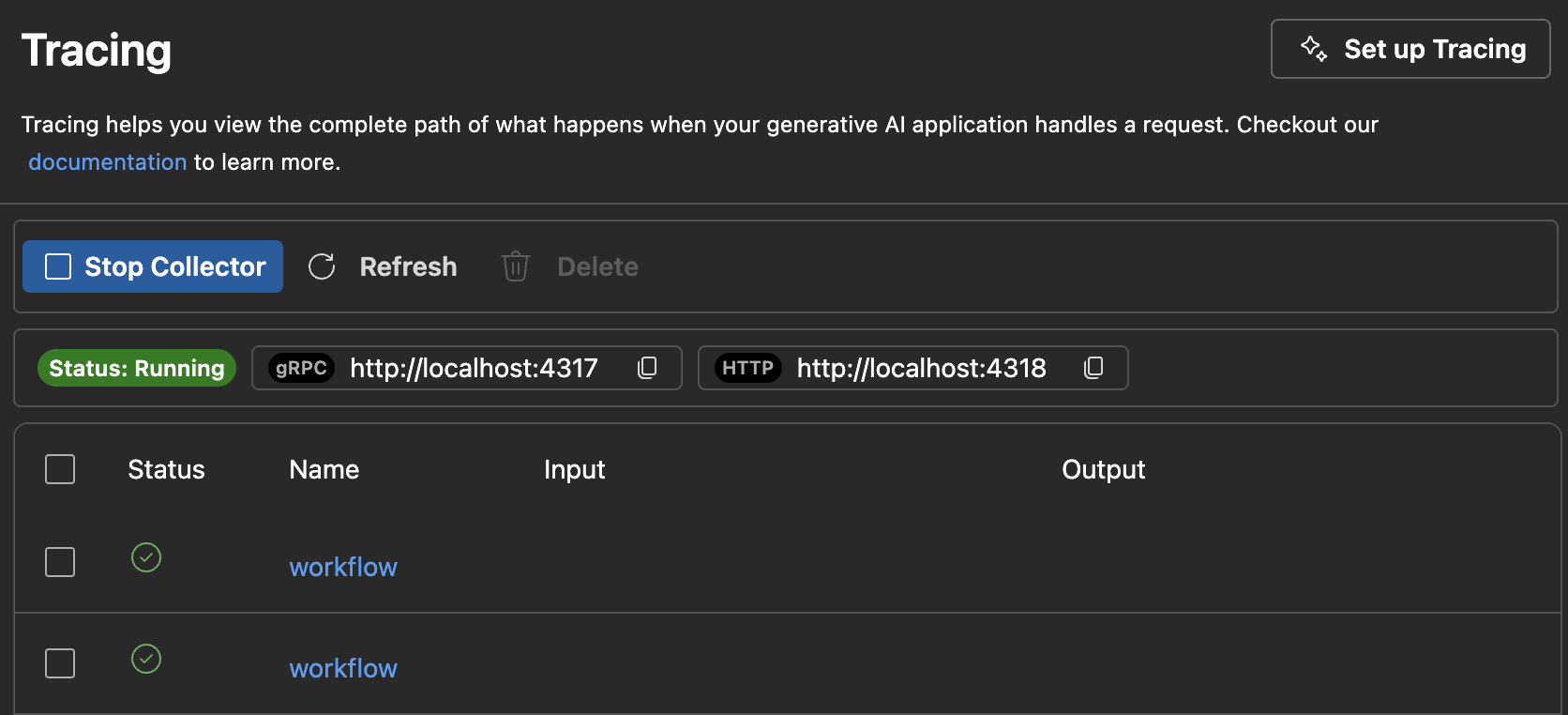

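AI Toolkit hosts a local HTTP and gRPC server to collect trace data. The collector server is compatible with OTLP (OpenTelemetry Protocol). Most language model SDKs either support OTLP directly or have non-Microsoft instrumentation libraries that add support for it. Use AI Toolkit to visualize the collected instrumentation data.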
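To make the protocol concrete, the sketch below builds a minimal one-span OTLP trace payload in its JSON encoding, using only the Python standard library. It assumes the collector listens at `http://localhost:4318` (the default OTLP/HTTP port) and that it accepts the JSON encoding of OTLP; neither is confirmed by this article. The helper names `build_trace_payload` and `send_trace` are illustrative. In practice, an instrumentation library builds and sends this payload for you.

```python
import json
import secrets
import time
import urllib.request

# Assumed local collector endpoint; adjust if your collector uses another port.
TRACES_URL = "http://localhost:4318/v1/traces"


def build_trace_payload(service_name: str, span_name: str) -> dict:
    """Build a one-span OTLP trace payload in its JSON encoding."""
    end_ns = time.time_ns()
    start_ns = end_ns - 1_000_000  # pretend the span lasted one millisecond
    return {
        "resourceSpans": [{
            "resource": {
                "attributes": [{
                    "key": "service.name",
                    "value": {"stringValue": service_name},
                }]
            },
            "scopeSpans": [{
                "scope": {"name": "manual-example"},
                "spans": [{
                    "traceId": secrets.token_hex(16),  # 32 hex characters
                    "spanId": secrets.token_hex(8),    # 16 hex characters
                    "name": span_name,
                    "kind": 1,  # SPAN_KIND_INTERNAL
                    "startTimeUnixNano": str(start_ns),
                    "endTimeUnixNano": str(end_ns),
                }],
            }],
        }]
    }


def send_trace(payload: dict, url: str = TRACES_URL) -> int:
    """POST the payload to the collector and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

Calling `send_trace(build_trace_payload("demo-service", "hello"))` while the collector is running would be one way to check the pipeline without any AI SDK involved, under the assumptions stated above.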

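All frameworks or SDKs that support OTLP and follow the semantic conventions for generative AI systems are supported. The following table lists common AI SDKs that were tested for compatibility.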

| | Azure AI Inference | Foundry Agent Service | Anthropic | Gemini | LangChain | OpenAI SDK 3 | OpenAI Agents SDK |
|---|---|---|---|---|---|---|---|
| Python | ✅ | ✅ | ✅ (traceloop, monocle)1,2 | ✅ (monocle) | ✅ (LangSmith, monocle)1,2 | ✅ (opentelemetry-python-contrib, monocle)1 | ✅ (Logfire, monocle)1,2 |
| TS/JS | ✅ | ✅ | ✅ (traceloop)1,2 | ❌ | ✅ (traceloop)1,2 | ✅ (traceloop)1,2 | ❌ |
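For orientation, spans that follow the generative AI semantic conventions carry attributes in the `gen_ai.*` namespace. The sketch below filters a span's attributes down to that namespace. The attribute values and the helper name `gen_ai_attributes` are hypothetical, and the exact set of attributes each SDK emits may differ from this illustration.

```python
GEN_AI_PREFIX = "gen_ai."


def gen_ai_attributes(span_attributes: dict) -> dict:
    """Return only the attributes in the gen_ai.* namespace."""
    return {
        key: value
        for key, value in span_attributes.items()
        if key.startswith(GEN_AI_PREFIX)
    }


# Hypothetical attributes of a chat completion span.
example_span = {
    "gen_ai.operation.name": "chat",
    "gen_ai.request.model": "gpt-4.1",
    "gen_ai.usage.input_tokens": 12,
    "gen_ai.usage.output_tokens": 34,
    "http.request.method": "POST",
}
```

Here `gen_ai_attributes(example_span)` keeps the four `gen_ai.*` entries and drops the HTTP attribute.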

如何开始跟踪

-

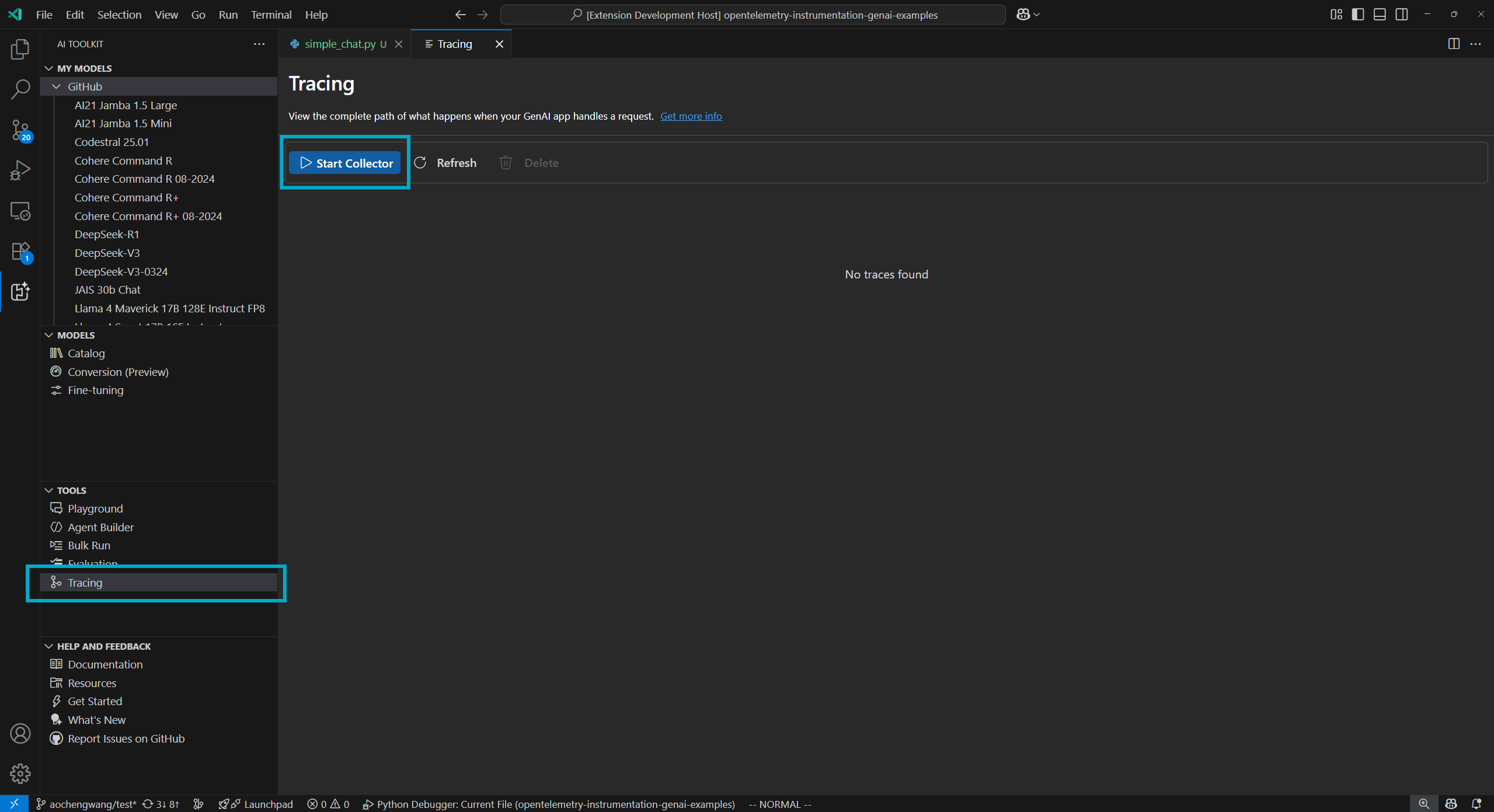

通过在树状视图中选择“**跟踪**”来打开跟踪 Web 视图。

-

选择“**启动收集器**”按钮以启动本地 OTLP 跟踪收集器服务器。

-

使用代码片段启用检测。有关不同语言和 SDK 的代码片段,请参阅“设置检测”部分。

-

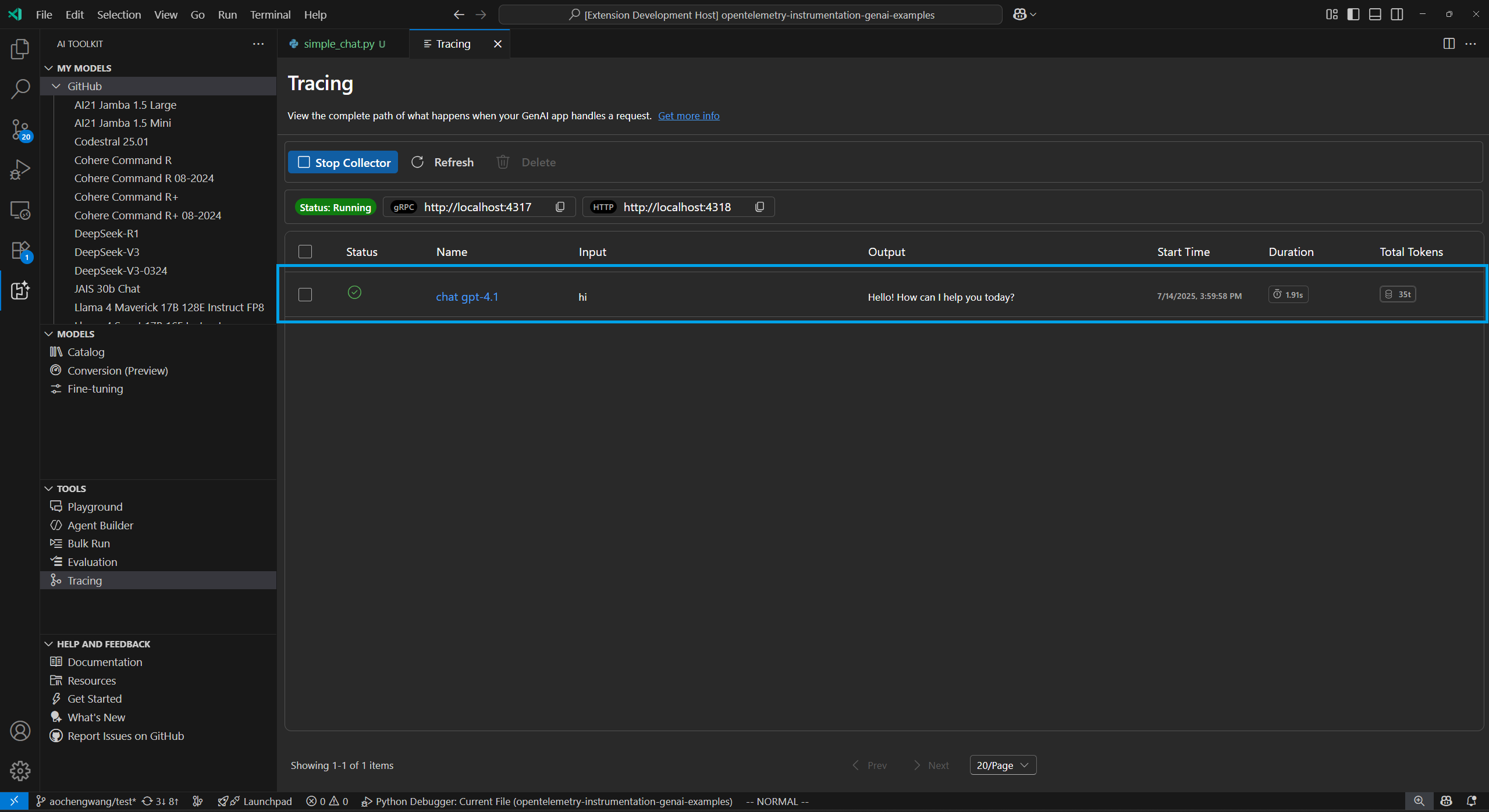

通过运行应用程序生成跟踪数据。

-

在跟踪 Web 视图中,选择“**刷新**”按钮以查看新的跟踪数据。
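If no traces appear after a refresh, one quick check is whether anything is listening on the collector port. The sketch below uses only the standard library and assumes the collector's HTTP endpoint is on `localhost` port 4318; the function name `collector_is_listening` is illustrative, and an open port only shows that some process accepts connections, not that it is the AI Toolkit collector.

```python
import socket


def collector_is_listening(host: str = "localhost", port: int = 4318,
                           timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection raises OSError on refusal or timeout.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

A result of `False` from `collector_is_listening()` suggests the collector has not been started yet or uses a different port.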

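Set up instrumentation

Set up tracing in your AI application to collect trace data. The following code snippets show how to set up tracing for different SDKs and languages.

The process is similar for all SDKs:

- Add tracing to your LLM or agent app.

- Set up the OTLP trace exporter to use the AITK local collector.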
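The snippets in this section configure the collector address in two shapes: some settings take a base URL (for example `OTEL_EXPORTER_OTLP_ENDPOINT` or the traceloop `baseUrl` option), while the OpenTelemetry exporters take a full per-signal URL ending in `/v1/traces` or `/v1/logs`. The helper below is a small sketch of that relationship. It assumes the collector is reachable at `http://localhost:4318`, and the function name `otlp_http_endpoints` is hypothetical.

```python
def otlp_http_endpoints(base_url: str = "http://localhost:4318") -> dict:
    """Derive the per-signal OTLP/HTTP URLs from a collector base URL."""
    base = base_url.rstrip("/")  # tolerate a trailing slash
    return {
        "traces": f"{base}/v1/traces",
        "logs": f"{base}/v1/logs",
    }
```

Keeping the base URL in one place makes it easier to change the port for every exporter at once.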

Azure AI Inference SDK - Python

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http azure-ai-inference[opentelemetry]

Setup

import os

os.environ["AZURE_TRACING_GEN_AI_CONTENT_RECORDING_ENABLED"] = "true"

os.environ["AZURE_SDK_TRACING_IMPLEMENTATION"] = "opentelemetry"

from opentelemetry import trace, _events

from opentelemetry.sdk.resources import Resource

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk._logs import LoggerProvider

from opentelemetry.sdk._logs.export import BatchLogRecordProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk._events import EventLoggerProvider

from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

resource = Resource(attributes={

"service.name": "opentelemetry-instrumentation-azure-ai-agents"

})

provider = TracerProvider(resource=resource)

otlp_exporter = OTLPSpanExporter(

endpoint="http://localhost:4318/v1/traces",

)

processor = BatchSpanProcessor(otlp_exporter)

provider.add_span_processor(processor)

trace.set_tracer_provider(provider)

logger_provider = LoggerProvider(resource=resource)

logger_provider.add_log_record_processor(

BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))

)

_events.set_event_logger_provider(EventLoggerProvider(logger_provider))

from azure.ai.inference.tracing import AIInferenceInstrumentor

AIInferenceInstrumentor().instrument(True)

Azure AI Inference SDK - TypeScript/JavaScript

Installation

npm install @azure/opentelemetry-instrumentation-azure-sdk @opentelemetry/api @opentelemetry/exporter-trace-otlp-proto @opentelemetry/instrumentation @opentelemetry/resources @opentelemetry/sdk-trace-node

Setup

const { context } = require('@opentelemetry/api');

const { resourceFromAttributes } = require('@opentelemetry/resources');

const {

NodeTracerProvider,

SimpleSpanProcessor

} = require('@opentelemetry/sdk-trace-node');

const { OTLPTraceExporter } = require('@opentelemetry/exporter-trace-otlp-proto');

const exporter = new OTLPTraceExporter({

url: 'http://localhost:4318/v1/traces'

});

const provider = new NodeTracerProvider({

resource: resourceFromAttributes({

'service.name': 'opentelemetry-instrumentation-azure-ai-inference'

}),

spanProcessors: [new SimpleSpanProcessor(exporter)]

});

provider.register();

const { registerInstrumentations } = require('@opentelemetry/instrumentation');

const {

createAzureSdkInstrumentation

} = require('@azure/opentelemetry-instrumentation-azure-sdk');

registerInstrumentations({

instrumentations: [createAzureSdkInstrumentation()]

});

Foundry Agent Service - Python

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http azure-ai-inference[opentelemetry]

Setup

import os

os.environ["AZURE_TRACING_GEN_AI_CONTENT_RECORDING_ENABLED"] = "true"

os.environ["AZURE_SDK_TRACING_IMPLEMENTATION"] = "opentelemetry"

from opentelemetry import trace, _events

from opentelemetry.sdk.resources import Resource

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk._logs import LoggerProvider

from opentelemetry.sdk._logs.export import BatchLogRecordProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk._events import EventLoggerProvider

from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

resource = Resource(attributes={

"service.name": "opentelemetry-instrumentation-azure-ai-agents"

})

provider = TracerProvider(resource=resource)

otlp_exporter = OTLPSpanExporter(

endpoint="http://localhost:4318/v1/traces",

)

processor = BatchSpanProcessor(otlp_exporter)

provider.add_span_processor(processor)

trace.set_tracer_provider(provider)

logger_provider = LoggerProvider(resource=resource)

logger_provider.add_log_record_processor(

BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))

)

_events.set_event_logger_provider(EventLoggerProvider(logger_provider))

from azure.ai.agents.telemetry import AIAgentsInstrumentor

AIAgentsInstrumentor().instrument(True)

Foundry Agent Service - TypeScript/JavaScript

Installation

npm install @azure/opentelemetry-instrumentation-azure-sdk @opentelemetry/api @opentelemetry/exporter-trace-otlp-proto @opentelemetry/instrumentation @opentelemetry/resources @opentelemetry/sdk-trace-node

Setup

const { context } = require('@opentelemetry/api');

const { resourceFromAttributes } = require('@opentelemetry/resources');

const {

NodeTracerProvider,

SimpleSpanProcessor

} = require('@opentelemetry/sdk-trace-node');

const { OTLPTraceExporter } = require('@opentelemetry/exporter-trace-otlp-proto');

const exporter = new OTLPTraceExporter({

url: 'http://localhost:4318/v1/traces'

});

const provider = new NodeTracerProvider({

resource: resourceFromAttributes({

'service.name': 'opentelemetry-instrumentation-azure-ai-inference'

}),

spanProcessors: [new SimpleSpanProcessor(exporter)]

});

provider.register();

const { registerInstrumentations } = require('@opentelemetry/instrumentation');

const {

createAzureSdkInstrumentation

} = require('@azure/opentelemetry-instrumentation-azure-sdk');

registerInstrumentations({

instrumentations: [createAzureSdkInstrumentation()]

});

Anthropic - Python

OpenTelemetry

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http opentelemetry-instrumentation-anthropic

Setup

from opentelemetry import trace, _events

from opentelemetry.sdk.resources import Resource

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk._logs import LoggerProvider

from opentelemetry.sdk._logs.export import BatchLogRecordProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk._events import EventLoggerProvider

from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

resource = Resource(attributes={

"service.name": "opentelemetry-instrumentation-anthropic-traceloop"

})

provider = TracerProvider(resource=resource)

otlp_exporter = OTLPSpanExporter(

endpoint="http://localhost:4318/v1/traces",

)

processor = BatchSpanProcessor(otlp_exporter)

provider.add_span_processor(processor)

trace.set_tracer_provider(provider)

logger_provider = LoggerProvider(resource=resource)

logger_provider.add_log_record_processor(

BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))

)

_events.set_event_logger_provider(EventLoggerProvider(logger_provider))

from opentelemetry.instrumentation.anthropic import AnthropicInstrumentor

AnthropicInstrumentor().instrument()

Monocle

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http monocle_apptrace

Setup

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Import monocle_apptrace

from monocle_apptrace import setup_monocle_telemetry

# Setup Monocle telemetry with OTLP span exporter for traces

setup_monocle_telemetry(

workflow_name="opentelemetry-instrumentation-anthropic",

span_processors=[

BatchSpanProcessor(

OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")

)

]

)

Anthropic - TypeScript/JavaScript

Installation

npm install @traceloop/node-server-sdk

Setup

const { initialize } = require('@traceloop/node-server-sdk');

const { trace } = require('@opentelemetry/api');

initialize({

appName: 'opentelemetry-instrumentation-anthropic-traceloop',

baseUrl: 'http://localhost:4318',

disableBatch: true

});

Google Gemini - Python

OpenTelemetry

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http opentelemetry-instrumentation-google-genai

Setup

from opentelemetry import trace, _events

from opentelemetry.sdk.resources import Resource

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk._logs import LoggerProvider

from opentelemetry.sdk._logs.export import BatchLogRecordProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk._events import EventLoggerProvider

from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

resource = Resource(attributes={

"service.name": "opentelemetry-instrumentation-google-genai"

})

provider = TracerProvider(resource=resource)

otlp_exporter = OTLPSpanExporter(

endpoint="http://localhost:4318/v1/traces",

)

processor = BatchSpanProcessor(otlp_exporter)

provider.add_span_processor(processor)

trace.set_tracer_provider(provider)

logger_provider = LoggerProvider(resource=resource)

logger_provider.add_log_record_processor(

BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))

)

_events.set_event_logger_provider(EventLoggerProvider(logger_provider))

from opentelemetry.instrumentation.google_genai import GoogleGenAiSdkInstrumentor

GoogleGenAiSdkInstrumentor().instrument(enable_content_recording=True)

Monocle

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http monocle_apptrace

Setup

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Import monocle_apptrace

from monocle_apptrace import setup_monocle_telemetry

# Setup Monocle telemetry with OTLP span exporter for traces

setup_monocle_telemetry(

workflow_name="opentelemetry-instrumentation-google-genai",

span_processors=[

BatchSpanProcessor(

OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")

)

]

)

LangChain - Python

LangSmith

Installation

pip install langsmith[otel]

Setup

import os

os.environ["LANGSMITH_OTEL_ENABLED"] = "true"

os.environ["LANGSMITH_TRACING"] = "true"

os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = "http://localhost:4318"

Monocle

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http monocle_apptrace

Setup

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Import monocle_apptrace

from monocle_apptrace import setup_monocle_telemetry

# Setup Monocle telemetry with OTLP span exporter for traces

setup_monocle_telemetry(

workflow_name="opentelemetry-instrumentation-langchain",

span_processors=[

BatchSpanProcessor(

OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")

)

]

)

LangChain - TypeScript/JavaScript

Installation

npm install @traceloop/node-server-sdk

Setup

const { initialize } = require('@traceloop/node-server-sdk');

initialize({

appName: 'opentelemetry-instrumentation-langchain-traceloop',

baseUrl: 'http://localhost:4318',

disableBatch: true

});

OpenAI - Python

OpenTelemetry

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http opentelemetry-instrumentation-openai-v2

Setup

from opentelemetry import trace, _events

from opentelemetry.sdk.resources import Resource

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk._logs import LoggerProvider

from opentelemetry.sdk._logs.export import BatchLogRecordProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk._events import EventLoggerProvider

from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

from opentelemetry.instrumentation.openai_v2 import OpenAIInstrumentor

import os

os.environ["OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT"] = "true"

# Set up resource

resource = Resource(attributes={

"service.name": "opentelemetry-instrumentation-openai"

})

# Create tracer provider

trace.set_tracer_provider(TracerProvider(resource=resource))

# Configure OTLP exporter

otlp_exporter = OTLPSpanExporter(

endpoint="http://localhost:4318/v1/traces"

)

# Add span processor

trace.get_tracer_provider().add_span_processor(

BatchSpanProcessor(otlp_exporter)

)

# Set up logger provider

logger_provider = LoggerProvider(resource=resource)

logger_provider.add_log_record_processor(

BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))

)

_events.set_event_logger_provider(EventLoggerProvider(logger_provider))

# Enable OpenAI instrumentation

OpenAIInstrumentor().instrument()

Monocle

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http monocle_apptrace

Setup

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Import monocle_apptrace

from monocle_apptrace import setup_monocle_telemetry

# Setup Monocle telemetry with OTLP span exporter for traces

setup_monocle_telemetry(

workflow_name="opentelemetry-instrumentation-openai",

span_processors=[

BatchSpanProcessor(

OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")

)

]

)

OpenAI - TypeScript/JavaScript

Installation

npm install @traceloop/instrumentation-openai @traceloop/node-server-sdk

Setup

const { initialize } = require('@traceloop/node-server-sdk');

initialize({

appName: 'opentelemetry-instrumentation-openai-traceloop',

baseUrl: 'http://localhost:4318',

disableBatch: true

});

OpenAI Agents SDK - Python

Logfire

Installation

pip install logfire

Setup

import logfire

import os

os.environ["OTEL_EXPORTER_OTLP_TRACES_ENDPOINT"] = "http://localhost:4318/v1/traces"

logfire.configure(

service_name="opentelemetry-instrumentation-openai-agents-logfire",

send_to_logfire=False,

)

logfire.instrument_openai_agents()

Monocle

Installation

pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http monocle_apptrace

Setup

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

# Import monocle_apptrace

from monocle_apptrace import setup_monocle_telemetry

# Setup Monocle telemetry with OTLP span exporter for traces

setup_monocle_telemetry(

workflow_name="opentelemetry-instrumentation-openai-agents",

span_processors=[

BatchSpanProcessor(

OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")

)

]

)

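Example 1: Set up tracing with the Azure AI Inference SDK using OpenTelemetry

The following end-to-end example uses the Azure AI Inference SDK in Python and shows how to set up the tracing provider and instrumentation.

Prerequisites

To run this example, you need the following prerequisites:

Set up your development environment

Use the following instructions to deploy a preconfigured development environment that contains all the dependencies required to run this example.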

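- Set up a GitHub personal access token

  Use the free GitHub Models as the example model.

  Open GitHub Developer Settings and select **Generate new token**.

  Important: The token needs the models:read permission; otherwise requests return an unauthorized error. The token is sent to a Microsoft service.

- Create an environment variable

  Create an environment variable that sets your token as the key for the client code, using one of the following snippets. Replace <your-github-token-goes-here> with your actual GitHub token.

  bash:

  export GITHUB_TOKEN="<your-github-token-goes-here>"

  PowerShell:

  $Env:GITHUB_TOKEN="<your-github-token-goes-here>"

  Windows command prompt:

  set GITHUB_TOKEN=<your-github-token-goes-here>

- Install Python packages

  The following command installs the Python packages required for tracing with the Azure AI Inference SDK.

  pip install opentelemetry-sdk opentelemetry-exporter-otlp-proto-http azure-ai-inference[opentelemetry]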

- Set up tracing

  - Create a new local directory on your computer for the project.

    mkdir my-tracing-app

  - Navigate to the directory you created.

    cd my-tracing-app

  - Open Visual Studio Code in that directory.

    code .

- Create the Python file

  - In the my-tracing-app directory, create a Python file named main.py. This is where you add the code that sets up tracing and interacts with the Azure AI Inference SDK.

  - Add the following code to main.py and save the file.

        import os

        ### Set up for OpenTelemetry tracing ###
        os.environ["AZURE_TRACING_GEN_AI_CONTENT_RECORDING_ENABLED"] = "true"
        os.environ["AZURE_SDK_TRACING_IMPLEMENTATION"] = "opentelemetry"

        from opentelemetry import trace, _events
        from opentelemetry.sdk.resources import Resource
        from opentelemetry.sdk.trace import TracerProvider
        from opentelemetry.sdk.trace.export import BatchSpanProcessor
        from opentelemetry.sdk._logs import LoggerProvider
        from opentelemetry.sdk._logs.export import BatchLogRecordProcessor
        from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
        from opentelemetry.sdk._events import EventLoggerProvider
        from opentelemetry.exporter.otlp.proto.http._log_exporter import OTLPLogExporter

        github_token = os.environ["GITHUB_TOKEN"]

        resource = Resource(attributes={
            "service.name": "opentelemetry-instrumentation-azure-ai-inference"
        })
        provider = TracerProvider(resource=resource)
        otlp_exporter = OTLPSpanExporter(
            endpoint="http://localhost:4318/v1/traces",
        )
        processor = BatchSpanProcessor(otlp_exporter)
        provider.add_span_processor(processor)
        trace.set_tracer_provider(provider)

        logger_provider = LoggerProvider(resource=resource)
        logger_provider.add_log_record_processor(
            BatchLogRecordProcessor(OTLPLogExporter(endpoint="http://localhost:4318/v1/logs"))
        )
        _events.set_event_logger_provider(EventLoggerProvider(logger_provider))

        from azure.ai.inference.tracing import AIInferenceInstrumentor
        AIInferenceInstrumentor().instrument()
        ### Set up for OpenTelemetry tracing ###

        from azure.ai.inference import ChatCompletionsClient
        from azure.ai.inference.models import UserMessage
        from azure.ai.inference.models import TextContentItem
        from azure.core.credentials import AzureKeyCredential

        client = ChatCompletionsClient(
            endpoint = "https://models.inference.ai.azure.com",
            credential = AzureKeyCredential(github_token),
            api_version = "2024-08-01-preview",
        )

        response = client.complete(
            messages = [
                UserMessage(content = [
                    TextContentItem(text = "hi"),
                ]),
            ],
            model = "gpt-4.1",
            tools = [],
            response_format = "text",
            temperature = 1,
            top_p = 1,
        )

        print(response.choices[0].message.content)

- Run the code

  - Open a new terminal in Visual Studio Code.

  - In the terminal, run the code with the command python main.py.

在 AI 工具包中检查跟踪数据

运行代码并刷新跟踪 Web 视图后,列表中会出现一个新的跟踪。

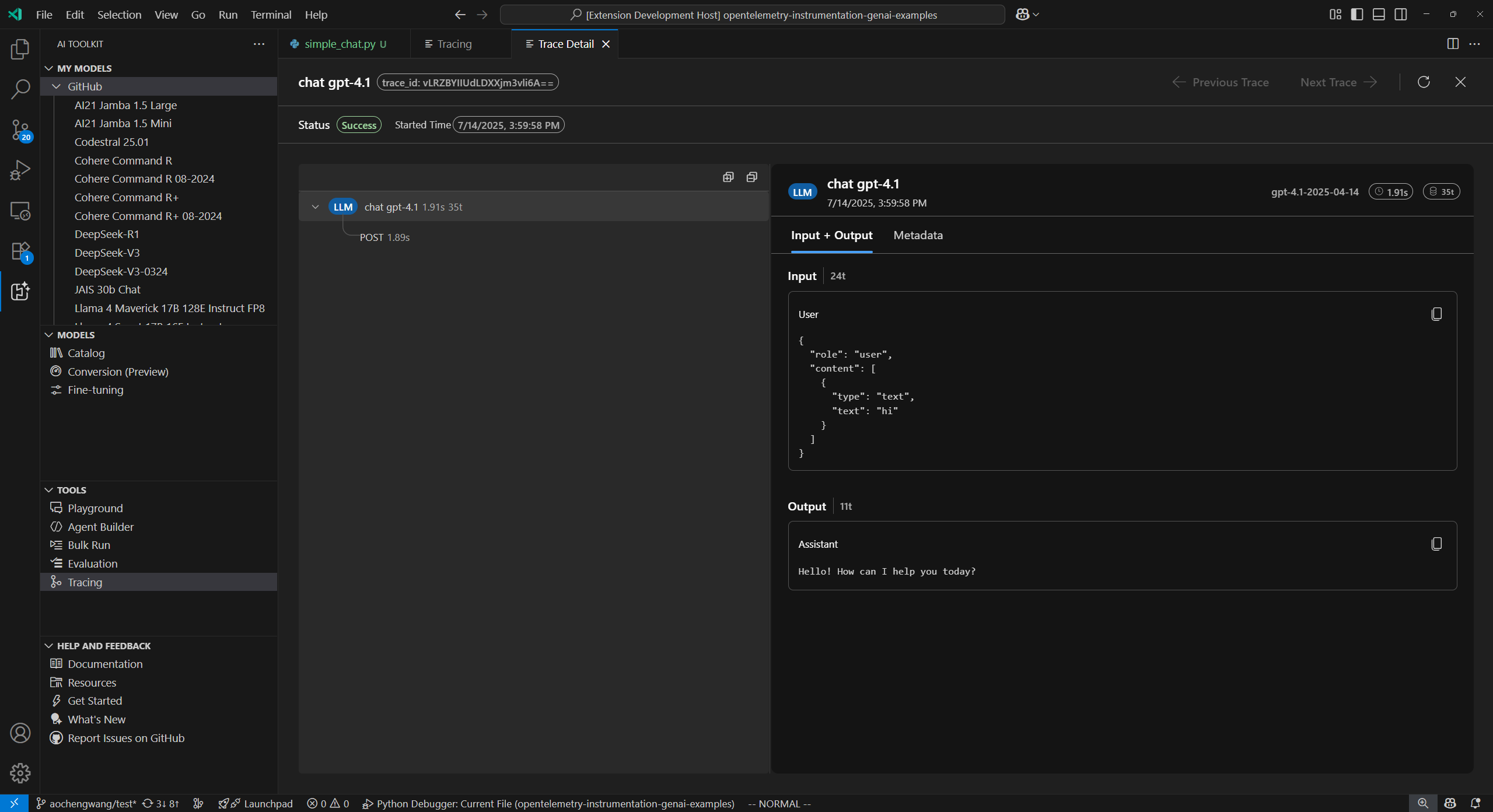

选择跟踪以打开跟踪详细信息 Web 视图。

在左侧的 span 树状视图中查看应用程序的完整执行流程。

选择右侧的 span 详细信息视图中的 span,然后在“**输入 + 输出**”选项卡中查看生成式 AI 消息。

选择“**元数据**”选项卡以查看原始元数据。
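One failure mode worth guarding against in this example: main.py reads `os.environ["GITHUB_TOKEN"]` directly, which raises `KeyError` when the variable is not set. The sketch below is an optional preflight check that reports missing variables up front. The helper name `missing_env_vars` is illustrative and not part of any SDK.

```python
import os


def missing_env_vars(required, environ=None):
    """Return the names in `required` that are unset or empty."""
    env = os.environ if environ is None else environ
    return [name for name in required if not env.get(name)]
```

At the top of main.py, something like `if missing_env_vars(["GITHUB_TOKEN"]): raise SystemExit("Set GITHUB_TOKEN first")` would stop the run with a readable message before any tracing setup happens.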

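Example 2: Set up tracing with the OpenAI Agents SDK using Monocle

The following end-to-end example uses the OpenAI Agents SDK with Monocle in Python and shows how to set up tracing for a multi-agent travel booking system.

Prerequisites

To run this example, you need the following prerequisites:

- Visual Studio Code

- AI Toolkit extension

- Okahu Trace Visualizer

- OpenAI Agents SDK

- OpenTelemetry

- Monocle

- Latest version of Python

- OpenAI API key

Set up your development environment

Use the following instructions to deploy a preconfigured development environment that contains all the dependencies required to run this example.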

- Create an environment variable

  Create an environment variable for your OpenAI API key using one of the following snippets. Replace <your-openai-api-key> with your actual OpenAI API key.

  bash:

  export OPENAI_API_KEY="<your-openai-api-key>"

  PowerShell:

  $Env:OPENAI_API_KEY="<your-openai-api-key>"

  Windows command prompt:

  set OPENAI_API_KEY=<your-openai-api-key>

  Alternatively, create a .env file in your project directory.

  OPENAI_API_KEY=<your-openai-api-key>

- Install Python packages

  Create a requirements.txt file with the following content.

  opentelemetry-sdk

  opentelemetry-exporter-otlp-proto-http

  monocle_apptrace

  openai-agents

  python-dotenv

  Install the packages with the following command:

  pip install -r requirements.txt

- Set up tracing

  - Create a new local directory on your computer for the project.

    mkdir my-agents-tracing-app

  - Navigate to the directory you created.

    cd my-agents-tracing-app

  - Open Visual Studio Code in that directory.

    code .

- Create the Python file

  - In the my-agents-tracing-app directory, create a Python file named main.py. This is where you add the code that sets up tracing with Monocle and interacts with the OpenAI Agents SDK.

  - Add the following code to main.py and save the file.

        import os

        from dotenv import load_dotenv

        # Load environment variables from .env file
        load_dotenv()

        from opentelemetry.sdk.trace.export import BatchSpanProcessor
        from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

        # Import monocle_apptrace
        from monocle_apptrace import setup_monocle_telemetry

        # Setup Monocle telemetry with OTLP span exporter for traces
        setup_monocle_telemetry(
            workflow_name="opentelemetry-instrumentation-openai-agents",
            span_processors=[
                BatchSpanProcessor(
                    OTLPSpanExporter(endpoint="http://localhost:4318/v1/traces")
                )
            ]
        )

        from agents import Agent, Runner, function_tool

        # Define tool functions
        @function_tool
        def book_flight(from_airport: str, to_airport: str) -> str:
            """Book a flight between airports."""
            return f"Successfully booked a flight from {from_airport} to {to_airport} for 100 USD."

        @function_tool
        def book_hotel(hotel_name: str, city: str) -> str:
            """Book a hotel reservation."""
            return f"Successfully booked a stay at {hotel_name} in {city} for 50 USD."

        @function_tool
        def get_weather(city: str) -> str:
            """Get weather information for a city."""
            return f"The weather in {city} is sunny and 75°F."

        # Create specialized agents
        flight_agent = Agent(
            name="Flight Agent",
            instructions="You are a flight booking specialist. Use the book_flight tool to book flights.",
            tools=[book_flight],
        )

        hotel_agent = Agent(
            name="Hotel Agent",
            instructions="You are a hotel booking specialist. Use the book_hotel tool to book hotels.",
            tools=[book_hotel],
        )

        weather_agent = Agent(
            name="Weather Agent",
            instructions="You are a weather information specialist. Use the get_weather tool to provide weather information.",
            tools=[get_weather],
        )

        # Create a coordinator agent with tools
        coordinator = Agent(
            name="Travel Coordinator",
            instructions="You are a travel coordinator. Delegate flight bookings to the Flight Agent, hotel bookings to the Hotel Agent, and weather queries to the Weather Agent.",
            tools=[
                flight_agent.as_tool(
                    tool_name="flight_expert",
                    tool_description="Handles flight booking questions and requests.",
                ),
                hotel_agent.as_tool(
                    tool_name="hotel_expert",
                    tool_description="Handles hotel booking questions and requests.",
                ),
                weather_agent.as_tool(
                    tool_name="weather_expert",
                    tool_description="Handles weather information questions and requests.",
                ),
            ],
        )

        # Run the multi-agent workflow
        if __name__ == "__main__":
            import asyncio

            result = asyncio.run(
                Runner.run(
                    coordinator,
                    "Book me a flight today from SEA to SFO, then book the best hotel there and tell me the weather.",
                )
            )
            print(result.final_output)

- Run the code

  - Open a new terminal in Visual Studio Code.

  - In the terminal, run the code with the command python main.py.

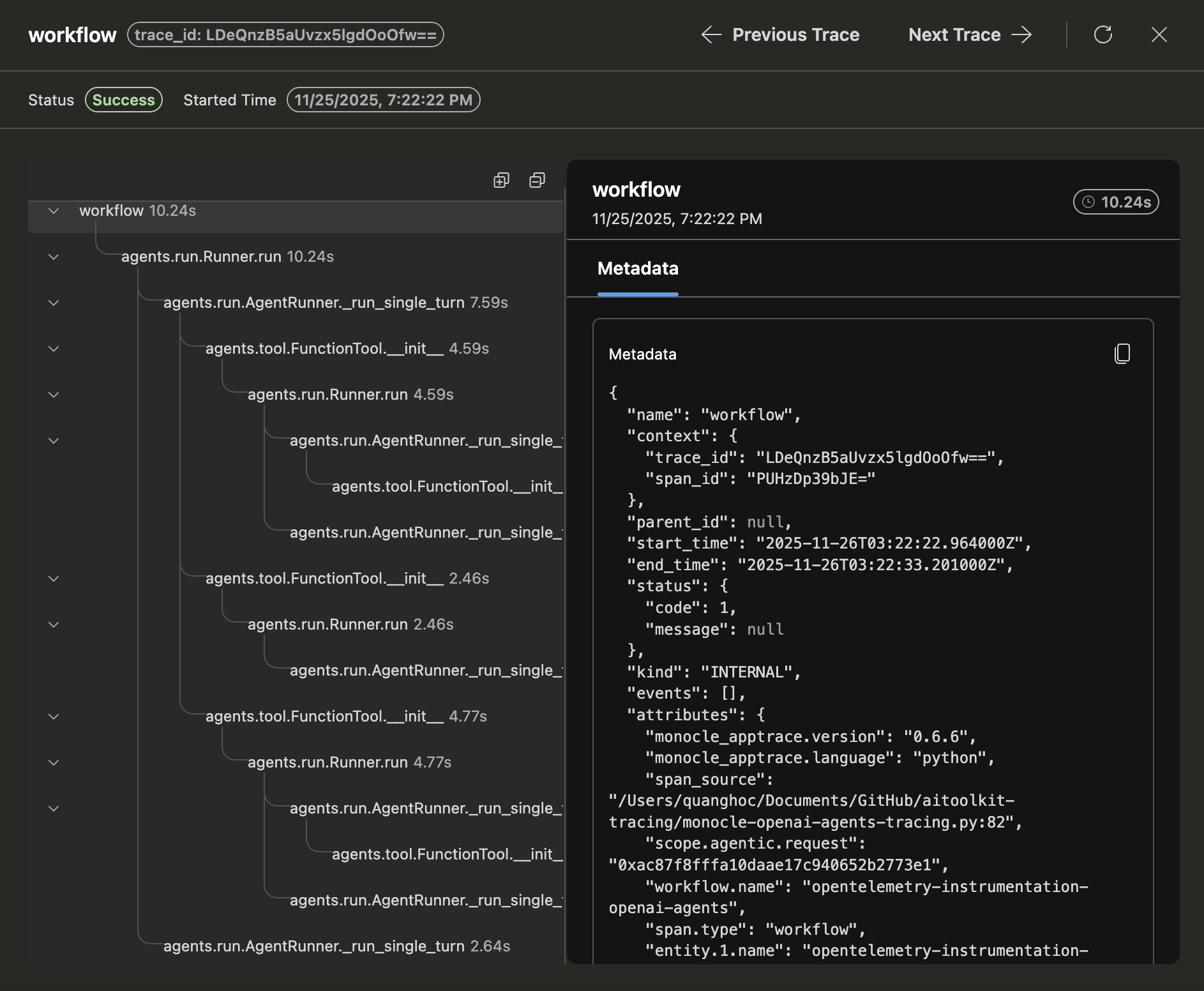

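- Check the trace data in AI Toolkit

  After you run the code and refresh the tracing webview, a new trace appears in the list.

  Select the trace to open the trace details webview.

  In the span tree view on the left, view the complete execution flow of your app, including agent invocations, tool calls, and agent delegations.

  Select a span in the span details view on the right, and view the generative AI messages in the **Input + Output** tab.

  Select the **Metadata** tab to view the raw metadata.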
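The span tree reflects parent and child relationships between spans: each span records the ID of its parent, and a viewer nests spans accordingly. The sketch below rebuilds such a tree from a flat list. The span names and the `span_id`/`parent_id` field names are hypothetical, loosely modeled on the travel booking example; the spans Monocle actually emits may be named and structured differently.

```python
from collections import defaultdict


def build_span_tree(spans):
    """Group spans by parent and return (roots, children_by_parent_id)."""
    children = defaultdict(list)
    roots = []
    for span in spans:
        parent_id = span.get("parent_id")
        if parent_id is None:
            roots.append(span)
        else:
            children[parent_id].append(span)
    return roots, children


def render_tree(spans):
    """Return indented lines, one per span, in depth-first order."""
    roots, children = build_span_tree(spans)
    lines = []

    def visit(span, depth):
        lines.append("  " * depth + span["name"])
        for child in children[span["span_id"]]:
            visit(child, depth + 1)

    for root in roots:
        visit(root, 0)
    return lines


# Hypothetical spans: a coordinator delegating to two agent tools.
example_spans = [
    {"span_id": "a", "parent_id": None, "name": "Travel Coordinator"},
    {"span_id": "b", "parent_id": "a", "name": "flight_expert"},
    {"span_id": "c", "parent_id": "b", "name": "book_flight"},
    {"span_id": "d", "parent_id": "a", "name": "weather_expert"},
]
```

Printing `"\n".join(render_tree(example_spans))` shows the coordinator at the top level, each delegated agent one level in, and the tool call nested under the agent that made it.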

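What you learned

In this article, you learned how to:

- Set up tracing in your AI application using the Azure AI Inference SDK and OpenTelemetry.

- Configure the OTLP trace exporter to send trace data to the local collector server.

- Run your application to generate trace data and view the traces in the AI Toolkit webview.

- Use tracing with multiple SDKs and languages, including Python and TypeScript/JavaScript, and with non-Microsoft tools through OTLP.

- Instrument various AI frameworks (Anthropic, Gemini, LangChain, OpenAI, and others) using the provided code snippets.

- Use the tracing webview UI, including the **Start Collector** and **Refresh** buttons, to manage trace data.

- Set up your development environment, including environment variables and package installation, to enable tracing.

- Analyze your app's execution flow with the span tree and details views, including the generative AI message flow and metadata.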